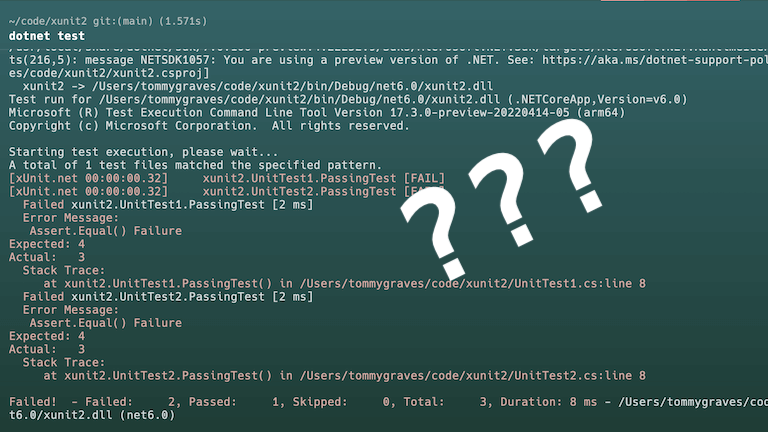

Flaky tests pass or fail inconsistently without any code changes. While many engineering teams strive to eliminate flaky tests, they're inevitable in many projects for reasons ranging from race conditions to sources of non-determinism such as time-dependent tests.

Unfortunately, flaky tests cost productivity: engineers have to retry their builds until they get lucky and every flaky test passes. The problem compounds with the number of flaky tests. If each flaky test has a 95% chance of passing and there are 10 of them, there's only about a 60% chance that all 10 pass. Frequent manual retries distract engineers and waste CI compute time and money.
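
The 60% figure comes from multiplying the per-test pass probabilities together: 0.95 raised to the 10th power is roughly 0.599. The short sketch below reproduces that arithmetic for a few suite sizes. It assumes each flaky test passes or fails independently of the others, which real flakes (for example, several tests sharing one unstable fixture) may not do.

```python
def prob_all_pass(pass_prob: float, num_flaky: int) -> float:
    """Probability that every one of `num_flaky` flaky tests passes in one build.

    Assumes the tests fail independently, each passing with `pass_prob`.
    """
    return pass_prob ** num_flaky


if __name__ == "__main__":
    # Show how quickly a "mostly reliable" test suite degrades as flakes accumulate.
    for n in (1, 5, 10, 20):
        print(f"{n:>2} flaky tests: {prob_all_pass(0.95, n):.1%} chance of a green build")
```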

While several strategies exist for mitigating flaky tests, dynamic quarantining is often overlooked and underutilized. Quarantining means continuing to run the test, but preventing it from failing the build. It's one of the strategies that Google uses to deal with flaky tests.

> Mitigation strategies include: a tool that monitors the flakiness of tests and if the flakiness is too high, it automatically quarantines the test. Quarantining removes the test from the critical path and files a bug for developers to reduce the flakiness.
>
> Test flakiness is an important problem, and Google is continuing to invest in detecting, mitigating, tracking, and fixing test flakiness throughout our code base.

We'll describe several common strategies that engineering teams can combine when deciding how they want to handle flaky tests.

# Do Nothing

Teams can choose to do nothing about flaky tests, letting the team feel the pain of the flakiness as motivation to address it. It's a noble approach and can be effective, but it typically breaks down at a certain scale. Depending on the nature of the flakiness, requiring every flake to be fixed can be impractical and is unlikely to provide a high enough ROI relative to other engineering efforts.

# Retrying

Another common approach is configuring automatic retries of tests. Often this is done as a uniform configuration across a test suite. Unfortunately, a single retry count for the entire test suite can be hard to calibrate. If the number of retries is too low, it may not mitigate the flakiness sufficiently, depending on the number of flaky tests, the probability that each fails, and how those values compound. If the number of retries is too high, it can lengthen build times and waste compute when a build has legitimate failures, especially if a change causes a large number of tests to fail. A large number of retries can also add substantial noise to the build output if the retry library prints every failed attempt rather than only one of them.
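
To make the calibration trade-off concrete, here is a small sketch. It assumes every attempt of a flaky test is independent, so a flaky test only fails the build when the first attempt and all of its retries fail. `min_retries` searches for the smallest uniform retry count that reaches a target build pass rate, and `worst_case_attempts` shows the cost side: a change that genuinely breaks many tests multiplies the executions spent on them by `retries + 1`. All function names here are illustrative, not part of any retry library.

```python
def build_pass_prob(pass_prob: float, num_flaky: int, retries: int) -> float:
    """Chance a build goes green when each flaky test may be retried `retries` times.

    Assumes attempts are independent; a test fails only if every attempt fails.
    """
    fail_every_attempt = (1 - pass_prob) ** (retries + 1)
    return (1 - fail_every_attempt) ** num_flaky


def min_retries(pass_prob: float, num_flaky: int, target: float) -> int:
    """Smallest uniform retry count whose build pass probability reaches `target`."""
    if not 0 < pass_prob <= 1 or not 0 < target < 1:
        raise ValueError("need 0 < pass_prob <= 1 and 0 < target < 1")
    retries = 0
    while build_pass_prob(pass_prob, num_flaky, retries) < target:
        retries += 1
    return retries


def worst_case_attempts(num_broken: int, retries: int) -> int:
    """Test executions spent when `num_broken` tests genuinely fail on every attempt."""
    return num_broken * (retries + 1)


if __name__ == "__main__":
    r = min_retries(0.95, 10, 0.99)
    print(f"retries needed for a 99% green build with 10 flaky tests: {r}")
    print(f"executions if a bad change breaks 200 tests: {worst_case_attempts(200, r)}")
```

With 10 tests that each pass 95% of the time, one retry only gets the build to about 97.5% green, while two retries clear 99%; yet those same two retries triple the work for every test a bad change breaks.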

The best approach to retrying is to apply a high retry count only to tests known to be flaky, and to print test output intelligently so that the logs aren't filled with duplicate failures. However, maintaining a retry count for each flaky test can be tedious, and not all testing frameworks or retry libraries can suppress duplicate output.

# Skipping

Another common approach is to skip flaky tests until the source of flakiness can be addressed. The disadvantage of skipping is that it's effectively the same as deleting the test, losing the coverage the test is intended to provide. It can also be a slow way to mitigate the problem, because skipping is often implemented in code: a pull request has to be opened and merged, and every other open pull request has to be rebased before it stops running into the flaky test.

# Quarantining

Quarantining continues to run flaky tests but prevents them from failing the build. Unlike skipping, if quarantining is paired with a mechanism that detects when a quarantined test begins to fail consistently rather than intermittently, engineers keep the value of the test (detecting broken functionality) without paying the cost of the flakiness (lost engineering productivity). Admittedly, even with permanent failure detection, quarantining could let a bug slip through, depending on commit velocity and deployment frequency. However, skipping the test guarantees that such a bug slips through, whereas quarantining preserves some chance of catching it.
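
The sketch below shows one way the build verdict could combine quarantining with permanent failure detection. It is an assumption-laden illustration, not how any particular tool decides: `history` is a hypothetical per-test list of recent pass/fail results (oldest first), and a quarantined test counts as permanently failing once its last `window` runs, including the current one, have all failed. The `window` of 3 is an arbitrary illustrative threshold.

```python
from typing import Mapping, Sequence


def is_permanently_failing(history: Sequence[bool], window: int = 3) -> bool:
    """True if the most recent `window` runs (oldest first, newest last) all failed."""
    recent = list(history)[-window:]
    return len(recent) == window and not any(recent)


def build_should_fail(
    results: Mapping[str, bool],
    quarantined: set[str],
    history: Mapping[str, Sequence[bool]],
    window: int = 3,
) -> bool:
    """Decide whether this build fails, given current results and quarantine state."""
    for name, passed in results.items():
        if passed:
            continue
        if name not in quarantined:
            return True  # an ordinary failure fails the build as usual
        # A quarantined test failed: block only if it now fails consistently.
        if is_permanently_failing(list(history.get(name, [])) + [passed], window):
            return True
    return False
```

A quarantined test that fails intermittently never blocks the build, but one that has flipped to failing every time does, which is how quarantining keeps the test's ability to catch real breakage.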

# Detection

Any strategy for handling flaky tests relies on accurate detection. Especially when skipping, engineers need to be sure that a test failed because of flakiness and not for another reason. Being too eager to conclude that a test is flaky can easily let a bug reach production.

The best detection mechanism is to identify a test that failed but then passed when retried on the same underlying code. Detection should compare results for the same commit SHA, or look specifically at retried builds in the CI system. Some tools label any test that fails on main as flaky, on the assumption that it passed on a feature branch, but that isn't a reliable way to determine flakiness, especially if branches can be merged without being up to date.
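
Here is a minimal sketch of same-commit detection. It assumes test results are available as records of test name, commit SHA, and outcome (the `TestRun` type is hypothetical). A test is flagged only when it both passed and failed on the same SHA, so a pass on a feature branch followed by a failure on main (two different SHAs) is not counted as flakiness.

```python
from collections import defaultdict
from typing import Iterable, NamedTuple


class TestRun(NamedTuple):
    test: str
    commit_sha: str
    passed: bool


def find_flaky_tests(runs: Iterable[TestRun]) -> set[str]:
    """Tests that both failed and passed on the same commit SHA (e.g. via a CI retry)."""
    outcomes: dict[tuple[str, str], set[bool]] = defaultdict(set)
    for run in runs:
        outcomes[(run.test, run.commit_sha)].add(run.passed)
    # Mixed outcomes on identical code are the signature of flakiness.
    return {test for (test, _sha), seen in outcomes.items() if seen == {True, False}}
```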

# Dynamic Mitigation

Statically mitigating flaky tests can delay the mean time to resolution of flakiness. The pull request that flags the test has to pass a build and be merged, and every other outstanding pull request needs to be rebased to pick up the mitigation. This can produce a backlog of pull requests and a corresponding thundering herd problem once the mitigation lands. Additional flakiness can also delay merging the pull request that quarantines the test, keeping the engineering team blocked from merging even longer. In contrast, dynamic mitigation takes effect immediately, without any rebasing. Dynamic mitigation can also be useful as a temporary measure while waiting on a static mitigation in code.
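
The difference between static and dynamic mitigation comes down to where the quarantine list lives. In the sketch below the list is read at test time from a JSON payload, standing in for a response from some service; the payload shape (a `"quarantined"` key) and both function names are hypothetical. Because nothing is committed to the repository, changing the list affects the next build on every branch, with no pull request or rebase.

```python
import json
from typing import Mapping


def load_quarantine(payload: str) -> set[str]:
    """Parse a quarantine list, e.g. as served by a (hypothetical) CI service."""
    data = json.loads(payload)
    return set(data.get("quarantined", []))


def exit_code(results: Mapping[str, bool], quarantined: set[str]) -> int:
    """Return 0 unless a non-quarantined test failed; quarantined tests still run."""
    failed = {name for name, passed in results.items() if not passed}
    for name in sorted(failed & quarantined):
        print(f"quarantined failure (not blocking): {name}")
    return 1 if failed - quarantined else 0
```

Quarantined failures are still reported, so the signal isn't lost; they just stop deciding whether the build passes.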

# Captain

We've incorporated these techniques into Captain, an app that provides engineering teams with tools for build and test performance and reliability. Captain features include:

- accurate flaky test detection – some other test analytics providers only indicate failure rates, without truly looking for flakiness from retries

- dynamic quarantining for instant mitigation

- permanent failure detection, indicating if a quarantined test goes from intermittently failing to permanently failing

We're also implementing support for targeted retries, allowing a retry policy to apply intelligently to known flakes. See the Captain docs for your test framework for more.

Check out Captain, and if you're interested in chatting about builds and tests, send us a note.
