Software engineers spend an incredible amount of time running tests and interpreting test results. Unfortunately, we often (woefully) neglect the developer experience (DX) of interacting with those results.

# Console logs are not quite up to the task

The first interaction with test results for most engineers is the console log. It's an old but tried-and-true approach that offers raw, unfiltered data. However, the sheer volume of output can be overwhelming, which makes it hard to pick out the details that matter.

Console logs lack the intuitive discoverability required for effective troubleshooting. They’re simply streams of text, without structure or metadata that could make them easier to understand or navigate. As a result, engineers often find themselves lost in a sea of cryptic messages, dedicating unnecessary time to deciphering the log instead of addressing the actual problem.

Don’t get me wrong: console logs have their place. If you run your test framework and a single test fails with a sensible and straightforward stack trace emitted at the end of the test run, there’s perhaps no better way to present that information than via stdout. But in complex test suites that run many types of tests and hit a variety of failures, the terminal simply isn’t up to the task of helping you comprehend your test results.

# Test frameworks are really fragmented

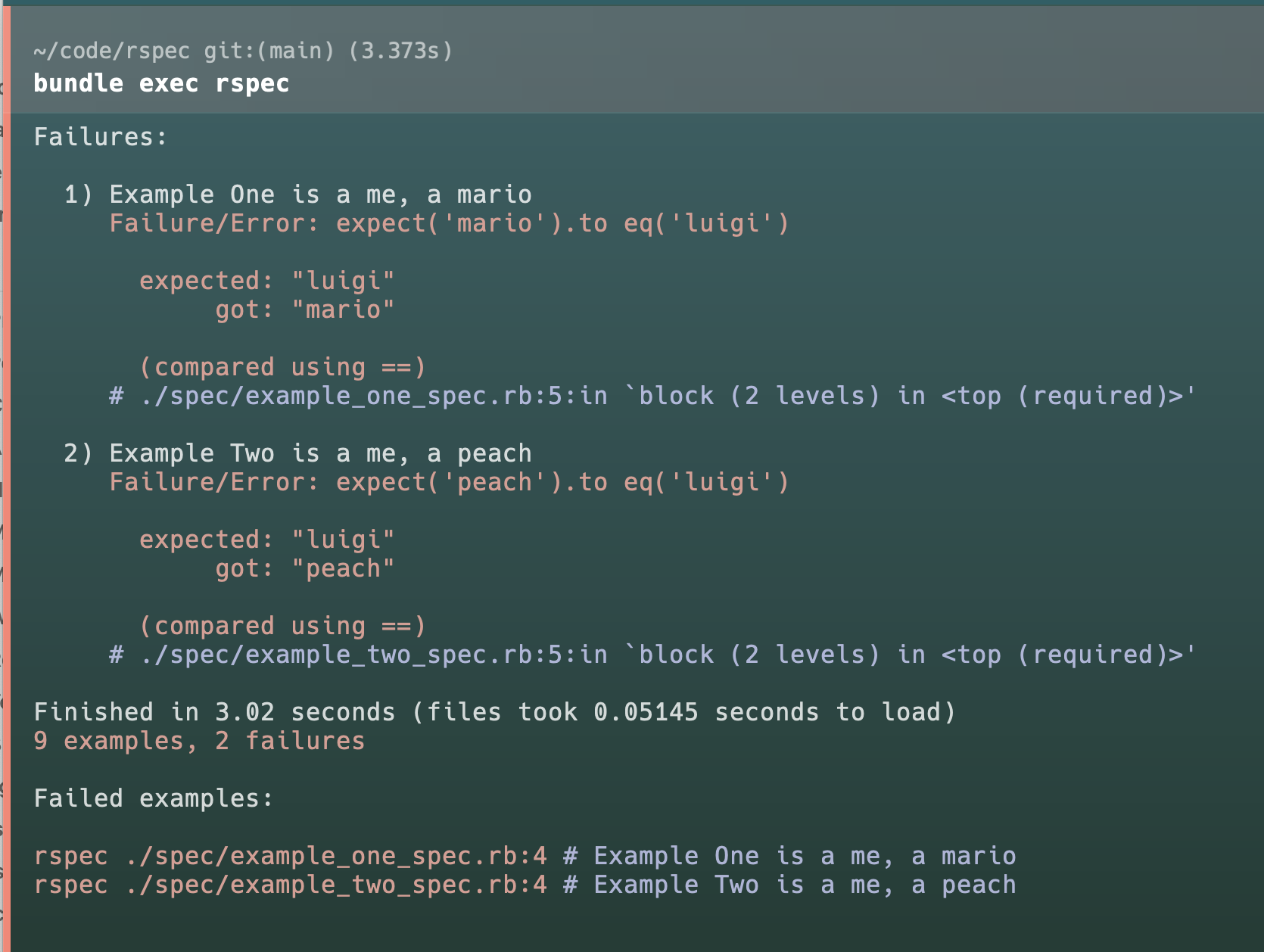

Another challenge lies in the wide range of test frameworks available to software engineers. Frameworks vary drastically in their output and capabilities. There are few things more frustrating than going from a test framework in one language that has a great result format to a test framework in another language that… doesn’t. For example, check out the (great) output that RSpec has versus the (dreadful) output of xUnit. Here’s a sample RSpec report adapted from our Captain example for RSpec:

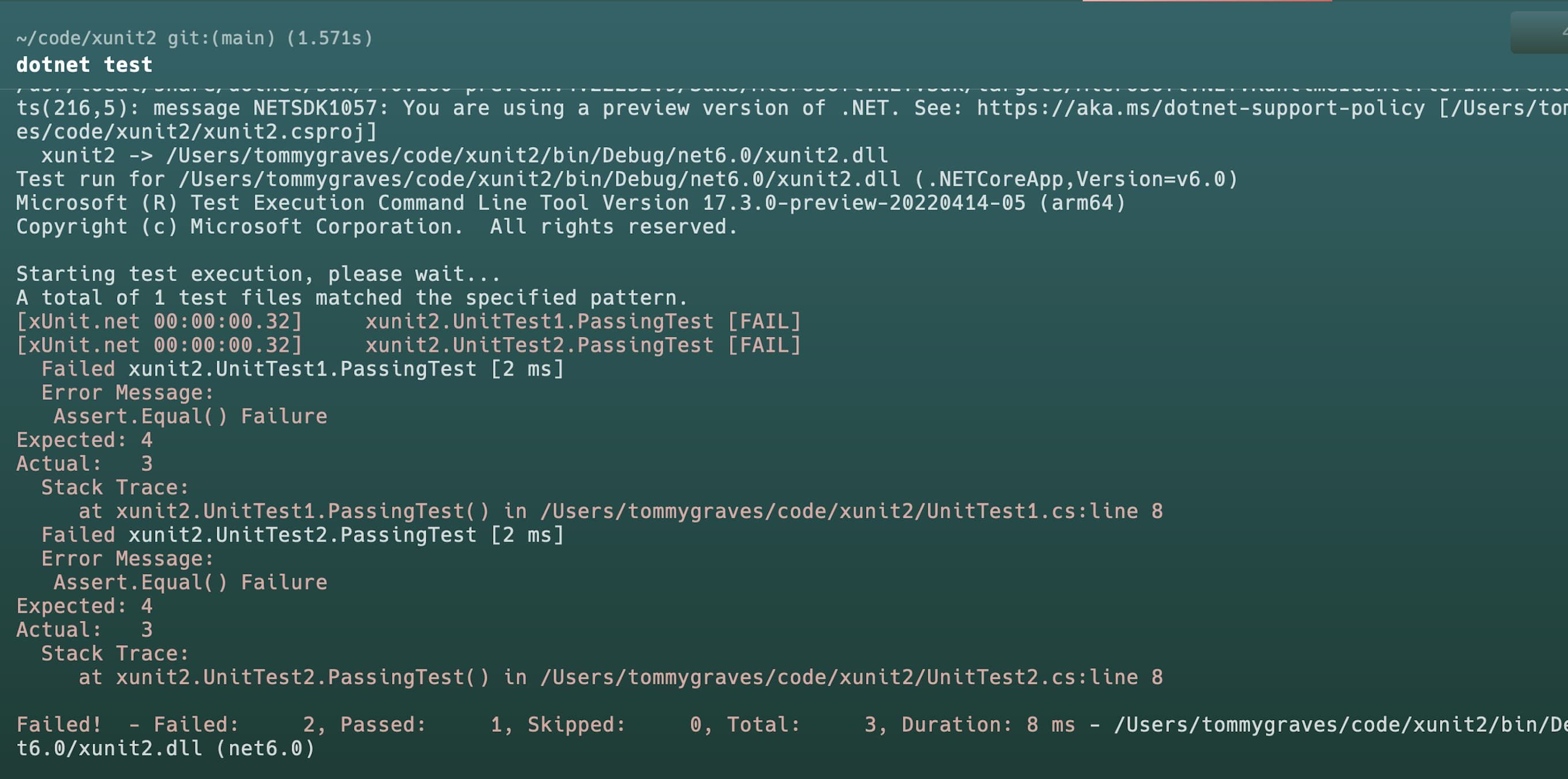

And here are some sample xUnit results from our Captain example for xUnit:

Notice how much better the RSpec output is organized, how the most important information (what tests failed) is at the end, and how effectively colors are used to highlight different details!

There aren’t good reasons why test frameworks couldn’t produce output like RSpec’s (or better!), but for many test frameworks, interacting with results seems to be a second-class concern.

# Formatters are underutilized

Many test frameworks allow creating richer test results via results formatters. Unfortunately, formatters are often underutilized, and the bespoke nature of each framework’s formatters means that great formatters in one toolchain aren’t useful to other toolchains.

Many frameworks provide DX-focused formatters, such as HTML reports. These strive to present test results in a more visually pleasing and organized manner, yet their utility often doesn't extend far beyond what's provided by console logs. They offer improved readability but often neglect to provide more meaningful data or analysis. And the relative effort required to open an HTML file versus looking directly at the console just isn’t worth it.

Test frameworks also rarely expose the full slate of information about your test results to formatters. For example, test frameworks with built-in retry capabilities rarely report information about retried tests, instead clobbering failures with their subsequent successes. In some cases the native log output has richer information than you can gather from the formatter mechanism!
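
To make the retry problem concrete, here is a minimal Python sketch of a result record that keeps every attempt instead of only the final one. The `Attempt` and `ResultRecord` names and their fields are hypothetical, chosen purely for illustration; they are not taken from any particular framework's formatter API.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Attempt:
    """One execution of a test (hypothetical shape, for illustration)."""
    status: str                    # "passed" or "failed"
    duration_s: float
    message: Optional[str] = None  # failure message, if any


@dataclass
class ResultRecord:
    """A test plus every attempt made at it, in execution order."""
    name: str
    attempts: List[Attempt] = field(default_factory=list)

    @property
    def final_status(self) -> str:
        # The last attempt decides the overall outcome.
        if not self.attempts:
            return "not_run"
        return self.attempts[-1].status

    @property
    def flaky(self) -> bool:
        # Passed in the end, but only after at least one failed attempt.
        return self.final_status == "passed" and any(
            a.status == "failed" for a in self.attempts
        )


result = ResultRecord(
    name="checkout applies discount",
    attempts=[
        Attempt("failed", 1.8, "expected 90, got 100"),
        Attempt("passed", 1.7),
    ],
)

print(result.final_status)  # passed
print(result.flaky)         # True
```

A formatter that only ever sees the final status would report this test as a plain pass. Keeping the attempt list preserves the earlier failure message, which is exactly the information that gets clobbered.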

# We can do a lot better

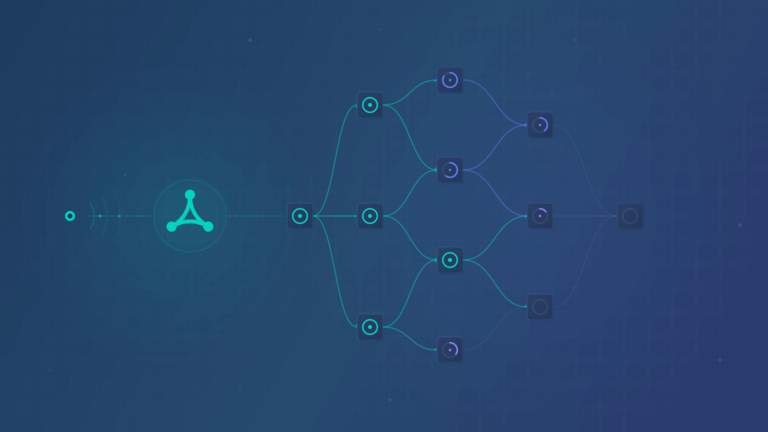

At RWX, we’ve been working on Captain, an open source test supercharger, and improving the test results story is a big part of what we’re trying to do. Captain takes your existing test framework and translates its test results to the RWX test result schema, a universal test result format that caters to the full gamut of test framework capabilities instead of limiting data to the lowest common denominator.
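
The general idea of translating framework-specific output into one shared shape can be sketched in a few lines of Python. Everything here is invented for illustration: the two input shapes, the field names, and the `NormalizedResult` type are assumptions, and this is neither the RWX test result schema nor how Captain is implemented.

```python
from dataclasses import dataclass
from typing import Any, Dict, Optional


@dataclass
class NormalizedResult:
    """A shared, framework-agnostic result shape (hypothetical)."""
    name: str
    status: str                    # "passed", "failed", or "skipped"
    duration_s: float
    message: Optional[str] = None


def from_framework_a(raw: Dict[str, Any]) -> NormalizedResult:
    # Hypothetical framework A: durations in seconds, nested exception object.
    exc = raw.get("exception") or {}
    return NormalizedResult(
        name=raw["full_description"],
        status=raw["status"],
        duration_s=raw["run_time"],
        message=exc.get("message"),
    )


def from_framework_b(raw: Dict[str, Any]) -> NormalizedResult:
    # Hypothetical framework B: durations in milliseconds, capitalized outcome.
    outcome = {"Pass": "passed", "Fail": "failed", "Skip": "skipped"}[raw["result"]]
    return NormalizedResult(
        name=raw["test"],
        status=outcome,
        duration_s=raw["time_ms"] / 1000,
        message=raw.get("error"),
    )


a = from_framework_a({
    "full_description": "Cart applies discount",
    "status": "failed",
    "run_time": 0.5,
    "exception": {"message": "expected 90, got 100"},
})
b = from_framework_b({"test": "CartTests.AppliesDiscount", "result": "Pass", "time_ms": 250})

print(a)
print(b)
```

Once both frameworks produce the same record type, any reporting built on top of it works for either one, which is the payoff of a shared format.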

We use test result data in this rich programmatic format to dramatically improve the developer experience around test results. Captain displays a unified output of your test results at the end of every test run, and this output is informed by what we’ve learned from the best test frameworks. So even if your test framework has mediocre output, you can use Captain to dramatically improve the way you interact with test logs.

Captain also includes a beautiful web UI for when you need to dive deeper into your test results. The web UI lets you sort and filter test results in a variety of ways, so you can, for instance, easily find the slowest tests in your test suite. And in the future, we plan on improving this experience even further by allowing you to view your test results in completely different ways, such as organizing your failures by failure message instead of by test case.
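
As a rough illustration of why structured results make these views cheap to build, here is a small Python sketch that finds the slowest tests and groups failures by failure message. The `Result` type, its fields, and the sample data are hypothetical; this is not Captain's code or its data model.

```python
from collections import defaultdict
from typing import Dict, List, NamedTuple, Optional


class Result(NamedTuple):
    """A structured test result (hypothetical shape, for illustration)."""
    name: str
    status: str
    duration_s: float
    message: Optional[str] = None


def slowest(results: List[Result], n: int = 3) -> List[Result]:
    # Sort by duration, longest first, and keep the top n.
    return sorted(results, key=lambda r: r.duration_s, reverse=True)[:n]


def failures_by_message(results: List[Result]) -> Dict[str, List[str]]:
    # Group failing test names under the message they failed with.
    groups: Dict[str, List[str]] = defaultdict(list)
    for r in results:
        if r.status == "failed":
            groups[r.message or "(no message)"].append(r.name)
    return dict(groups)


results = [
    Result("login succeeds", "passed", 0.4),
    Result("checkout applies discount", "failed", 2.1, "connection refused"),
    Result("search returns hits", "failed", 1.3, "connection refused"),
    Result("profile renders avatar", "failed", 0.2, "expected 200, got 500"),
]

for r in slowest(results, n=2):
    print(f"{r.duration_s:.1f}s  {r.name}")

for message, names in failures_by_message(results).items():
    print(f"{message}: {len(names)} test(s)")
```

Grouping by message shows that two of the three failures share one cause, something that is hard to spot when scrolling through a per-test console log.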

# Looking forward

We're excited about the RWX test result schema because it's portable and detailed, and we think there are a lot of opportunities for platforms to take advantage of the richer data it exposes. We're also building RWX CI/CD, which uses this richer data to surface test results as part of your daily interaction with CI as well as directly inside your editor.
